Visualising car accidents around Melbourne, VIC, using kepler.gl

Data Visualisation with kepler.gl

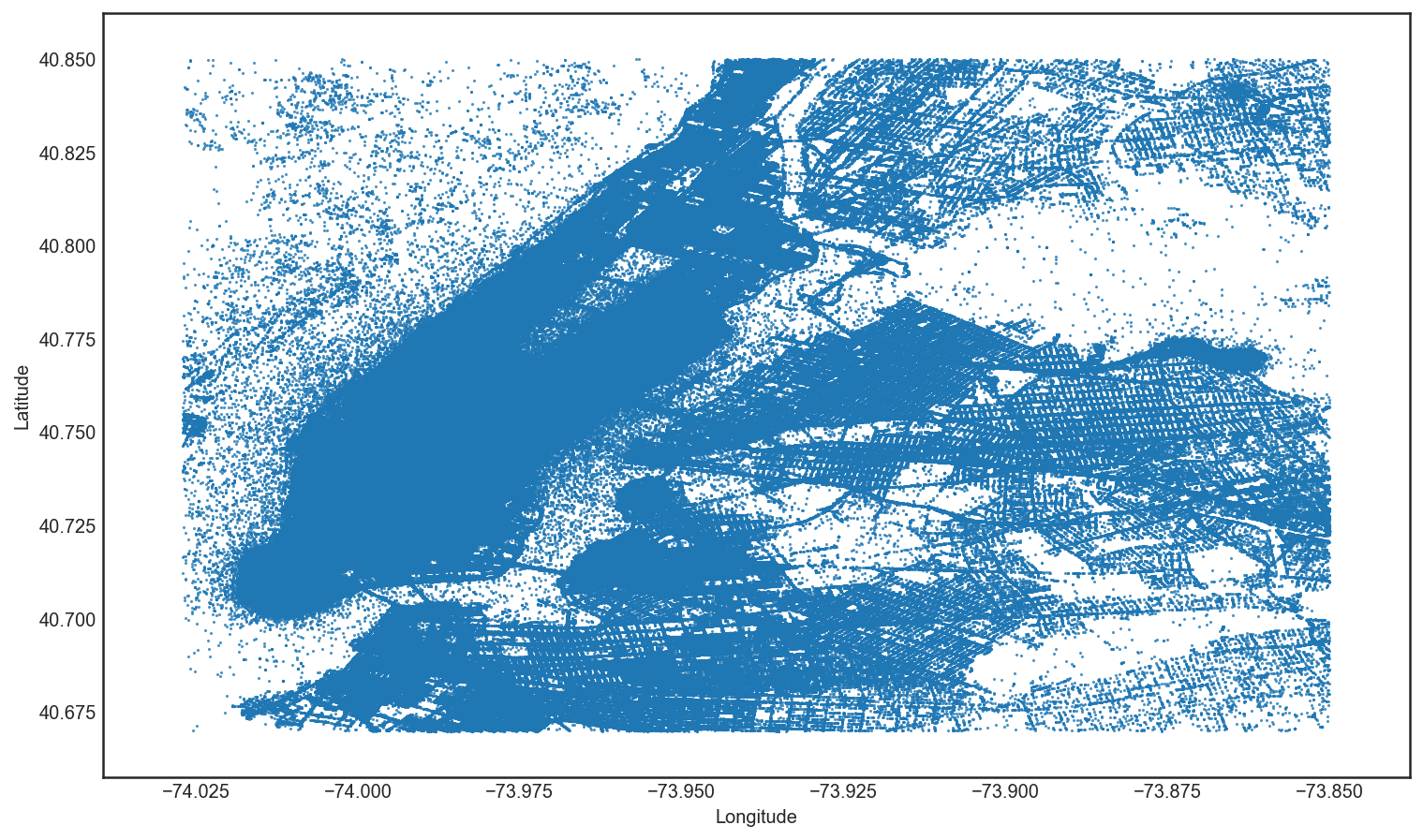

Using kepler.gl, Uber’s recently released geospatial visualisation tool, we can generate beautiful map-based visualisations, such as the chart below, which shows traffic accidents involving pedestrians in Melbourne, Australia, over the past four years.